+ TCP uxNxWinSize

Could I get a bit of an explanation of the WinProperties_t Rx and Tx WinSize fields?

Coming from a different IP stack, I’m having a hard time following why this is specified in MSS.

For example, I have a socket whose RX buffer I want to be 1,048,576 bytes, or 1 MB. This would translate to a window scale of 4 in TCP terms. However, 1048576 / 1460 = 718, so I’m not following exactly how this number is used, as the examples don’t use numbers this high.

+ TCP uxNxWinSize

A TCP conversation is parametrised by a few variables:

- MSS (Maximum Segment Size), which is the maximum size of the TCP payload in one segment. Often this is 1460 bytes.

- TCP window sizes

- TCP buffer sizes (which are internal to the stack)
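As a rough illustration of how these three quantities relate, assuming (as the question states) that the window size is given in units of MSS: the sketch below is plain C with hypothetical helper names, not part of the +TCP API.

```c
#include <stdint.h>

/* Hypothetical helper, not a FreeRTOS+TCP function:
 * convert a window size given in MSS units into bytes. */
uint32_t window_bytes(uint32_t win_size_in_mss, uint32_t mss)
{
    return win_size_in_mss * mss;
}

/* Hypothetical check: returns non-zero when the internal buffer
 * can hold at least one full window of data. */
int buffer_holds_window(uint32_t buf_size_bytes,
                        uint32_t win_size_in_mss,
                        uint32_t mss)
{
    return buf_size_bytes >= window_bytes(win_size_in_mss, mss);
}
```

With an MSS of 1460, a window of 12 segments is 17,520 bytes, which fits easily inside a much larger internal buffer.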

+ TCP uxNxWinSize

My communication is not on a LAN. It is a streaming audio device, and it really needs to work without skips where the internet connection isn’t so great. A larger TCP window combined with other internal buffering really helps. Perhaps 1 MB is overkill, but it has worked.

I think I follow now: window scaling really depends on the WinSize. In the Microchip stack I have been using, the TCP buffer size is the same as the window size, so it seemed confusing that the window size could be smaller than the buffer size.

+ TCP uxNxWinSize

You don’t need a TCP window as big as your buffering time. You could buffer a couple of seconds of video in your application, while the TCP layer uses a smaller window. This might actually improve performance: if an earlier packet was lost, you want to re-request it before you actually need it, so that it arrives in time (you don’t need much more window than the round trip delay time requires). The additional application buffer smooths out the varying quality of the connection. No amount of buffering (short of downloading the whole stream and then playing it) will get you skipless streaming if the connection can’t handle the needed rate, at least on average.
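The round-trip rule of thumb can be put into numbers with the bandwidth-delay product: the data in flight is roughly the stream rate multiplied by the round-trip time. A minimal sketch in plain C follows; the function name and the example figures (a 320 kbit/s stream, a 200 ms round trip) are assumptions for illustration, not measurements from this thread.

```c
#include <stdint.h>

/* Hypothetical helper: number of MSS-sized segments needed to cover one
 * round trip at a given stream rate (bandwidth-delay product, rounded up). */
uint32_t segments_for_rtt(uint32_t bitrate_bps, uint32_t rtt_ms, uint32_t mss)
{
    uint64_t bytes_in_flight;

    if (mss == 0u)
    {
        return 0u; /* avoid dividing by zero */
    }

    /* bits/s * ms / 1000 gives bits per round trip; / 8 gives bytes. */
    bytes_in_flight = ((uint64_t)bitrate_bps * rtt_ms) / 8000u;

    /* Round up to whole segments. */
    return (uint32_t)((bytes_in_flight + mss - 1u) / mss);
}
```

For a 320 kbit/s stream and a 200 ms round trip this gives 8000 bytes in flight, or 6 segments of 1460 bytes. In practice some headroom on top of that is probably sensible, since retransmissions also need room in the window.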

+ TCP uxNxWinSize

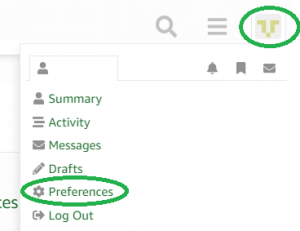

> The microchip stack … the tcp buffer size is the same as the window size

That is probably where your confusion comes from. In +TCP, these parameters are separated.

Richard Damon is right: it is more advantageous to have a reasonably sized TCP window and keep audio data buffered. It is up to you how much data must be in the buffer before you start the player.

The FreeRTOS+TCP stack can buffer TCP data for you. You can poll how much data is available in the buffer with this function:

~~~
BaseType_t FreeRTOS_rx_size( Socket_t xSocket );
~~~

My advice for your streaming client would be the following:

~~~
xWinProperties.lRxBufSize = 1024 * 1024; /* 1 MB, or more */
xWinProperties.lRxWinSize = 12;          /* 12 packets */
xWinProperties.lTxBufSize = 6 * 1024;    /* 6 KB */
xWinProperties.lTxWinSize = 2;           /* 2 packets */
~~~

You may want to wait until the RX buffer is 80% full and then start playing the sound.

If your Internet connection is not so good, the IP stack may decide to decrease the size of the advertised TCP window. Using a large TCP window may lead to a chaotic conversation with many retransmissions and Selective Acknowledgements (SACKs). If there are many dropped packets, a window size of 4 x MSS or less would be better.
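The 80% start-up threshold could be checked with something like the sketch below. It is plain C and the helper name is hypothetical; only the idea of comparing the buffered byte count against the configured RX buffer size comes from the advice above.

```c
#include <stdint.h>

/* Hypothetical helper: returns non-zero once at least `percent` of the
 * receive buffer is filled. Cross-multiplied in 64 bits so the check
 * stays in integer arithmetic without overflowing. */
int prebuffer_ready(uint32_t bytes_available,
                    uint32_t buffer_size,
                    uint32_t percent)
{
    return ((uint64_t)bytes_available * 100u) >=
           ((uint64_t)buffer_size * percent);
}
```

In the streaming client, `bytes_available` would come from `FreeRTOS_rx_size( xSocket )` (assuming the returned value is not negative) and `buffer_size` would be the value given to `lRxBufSize`. With a 1 MB buffer and an 80% threshold, playback would start once 838,861 bytes have been buffered.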

+ TCP uxNxWinSize

Have you tried the option ipconfigNIC_LINKSPEED_AUTODETECT? That worked for me: the Zynq gets a 1 Gbps connection.

I’ll attach the latest +TCP driver for Zynq, just to make sure it will also work for you.